James Cameron on AI: “I warned you guys in 1984 and you didn’t listen”

What a pompous statement. Stories of AI causing trouble like this predate him by decades. He’s never told an original story, they’re all heavily based on old sci-fi stories. And exactly how were people supposed to “listen”? “Jimmy said we shouldn’t work on AI, we all need to agree as a species to never do that. Thank you for saving us all Prophet Cameron!”

First he warned us about ai and nobody listened then he warned the submarine guy and he didn’t listen. We have to listen to him about the giant blue hippy aliens or we’ll all pay.

In fact, what is happening now sounds a lot more like Colossus: The Forbin Project (came out in 1970) than The Terminator.

No one has told an “original” story.

It’s a self indulgent and totally assinine remark but at least he’s saying something.

One minute the Internet simps for this guy; the next, he’s a hack.

Go figure

One minute the Internet simps for this guy; the next, he’s a hack.

if one person thinks one thing and another person thinks a different thing, that doesn’t make them both hypocrites even if they are both on the internet

Sure, but you can definitely gauge the level of popularity of one take over another.

At that point, the one which dominates is obviously going to be the one that holds the status quo

Plenty of people have told “original” stories.

My remark was self indulgent and totally as[s]inine, but I’m just saying something too, where’s my pass?

The internet doesn’t act as a single cohesive entity.

Plenty of people have told “original” stories.

Stories that are popular can be legal rip offs from something that is vaguely similar but never gained much exposure. So, what?

“I told a story that the world is familiar with and has sufficient relevance to the issues of today but nobody heeded the warning”

“uhh, yeah, but it wasn’t original”

My remark was self indulgent and totally as[s]inine, but I’m just saying something too, where’s my pass?

Sorry, I guess you probably have made a significant contribution that’s relevant to this glaring issue in society that we’re all trying to come to grips with?

The internet doesn’t act as a single cohesive entity

No, but a significant portion of it does act as a single, cohesive entity. Enough to perpetuate memes into popularity that glorify one and simultaneously vilify another.

That’s self evident.

It’s getting old telling people this, but… the AI that we have right now? Isn’t even really AI. It’s certainly not anything like in the movies. It’s just pattern-recognition algorithms. It doesn’t know or understand anything and it has no context. It can’t tell the difference between a truth and a lie, and it doesn’t know what a finger is. It just paints amalgamations of things it’s already seen, or throws together things that seem common to it— with no filter nor sense of “that can’t be correct”.

I’m not saying there’s nothing to be afraid of concerning today’s “AI”, but it’s not comparable to movie/book AI.

Edit: The replies annoy me. It’s just the same thing all over again: everything I said seems to have gone right over most people’s heads. If you don’t know what today’s “AI” is, then please stop making assumptions about what it is. Your imagination is way more interesting than what we actually have right now. This is why we should have never called what we have now “AI” in the first place, for the same reason we should never have called things “black holes”. You take a misnomer and your imagination goes wild, and none of it is factual.

THANK YOU. What we have today is amazing, but there’s still a massive gulf to cross before we arrive at artificial general intelligence.

What we have today is the equivalent of a four-year-old given a whole bunch of physics equations and then being told “hey, can you come up with something that looks like this?” It has no understanding besides “I see squiggly shape in A and squiggly shape in B, so I’ll copy squiggly shape onto C”.

GAI (General Artificial Intelligence) is what most people jump to. And, for those wondering, that’s the beginning of the end game type. That’s the kind that will understand context. The ability to ‘think’ on its own with little to no input from humans. What we have now is basically autocorrect on super steroids.

deleted by creator

Not much, because it turns out there’s more to AI than a hypothetical sum of what we already created.

deleted by creator

It’s not about improvement, it’s about actual AI being completely different technology, and working in a completely different way.

That’s not what they said.

What people are calling “AI” today is not AI in the sense of how laypeople understand it. Personally I hate the use of the term in this context and think it would have been much better to stick with Machine Learning (often just ML). Regardless, the point is that you cannot get from these systems to what you think of as AI. To get there it would require new, different systems. Or changing these systems so thoroughly as to make them unrecognizable from their origins.

If you put e.g. ChatGPT into a robotic body with sensors… you’d get nothing. It has no concept of a body. No concept of controlling the body. No concept of operating outside of the constraints within which it already operates. You could debate if it has some inhuman concept of language, but that debate is about as far as you can go.

Actual AI in the sense of how we conceive of it at a societal level is something else. It very well may be that, many years down the line, historians will look back at the ML advancements happening today as a major building block for the creation of that “true” AI of the future, but as-is they are not the same thing.

To put it another way: what happens if you connect the algorithms controlling a video game NPC to a robotic body? Absolutely nothing. Same deal here.

Not the guy you were referring to, but it’s not so much “improve” as “another paradigm shift is still needed”.

A “robotic body with sensors” has already been around since 1999. But no matter how many sensors, no matter how lifelike and no matter how many machine learning algorithms/LLMs are thrown in, it is still not capable of independent thought. Anything that goes wrong is still due to human error in setting parameters.

To get to a Terminator level intelligence, we need the machine to be capable of independent thought. Comparing independent thought to our current generative AI technology is like comparing a jet plane to a horse drawn carriage - you can call it “advancement”, yes, but there are many intermediate steps that need to happen. Just like an internal combustion engine is the linkage between horse-drawn carriages and planes, some form of independent thought is the link between generative AI and actual intelligent machines.

I really think the only thing to be concerned about is human bad actors with AI and not AI. AI alignment will be significantly easier than human alignment as we are for sure not aligned and it is not even our nature to be aligned.

I’ve had this same thought for decades now ever since I first heard of ai takeover scifi stuff as a kid. Bots just perform set functions. People in control of bots can create mayhem.

The replies annoy me. It’s just the same thing all over again: everything I said seems to have gone right over most people’s heads.

Not at all.

They just don’t like being told they’re wrong and will attack you instead of learning something.

That type of reductionism isn’t really helpful. You can describe the human brain to also just be pattern recognition algorithms. But doing that many times, at different levels, apparently gets you functional brains.

But his statement isn’t reductionism.

Sounds like you described a baby.

Yeah, I think there’s a little bit more to consciousness and learning than that. Today’s AI doesn’t even recognize objects, it just paints patterns.

I just listened to 2 different takes on AI by true experts and it’s way more than what you’re saying. If the AI doesn’t have good goals programmed in, we’re fucked. It’s also being controlled by huge corporations that decide what those goals are. Judging from the past, this is not good.

You seem to have completely missed the point of my post.

Could you explain to me how?

explain to me

It isn’t AI. It’s just a digital parrot. It just paints up text or images based on things it already saw. It has no understanding, knowledge, or context. Therefore it doesn’t matter how much data you feed it, it won’t be able to put together a poem that doesn’t sound hokey, or digital art where characters don’t have seven fingers or three feet. It doesn’t even understand what objects are and therefore how many of them there should be. They’re just pixels to the tech.

This technology will not be able to guide a robot to “think” and take actions accordingly. It’s just not the right technology— it’s not actually AI.

If the AI doesn’t have good goals programmed in, we’re fucked

When they built a new building at my college they decided to use “AI” (back when SunOS ruled the world) to determine the most efficient route for the elevator to take.

The parameter they gave it to measure was “how long does each person wait to get to their floor”. So it optimized for that and found it could get it down to 0 by never letting anyone get on, so they never got to their floor, so their wait time was unset (which = 0).

They tweaked the parameters to ensure everyone got to their floor and as far as I can tell it worked well. I never had to wait much for an elevator.
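For anyone who wants to see how that kind of loophole falls out of the metric, here is a minimal sketch in Python. It is not the college's actual system: the two policies, the wait times, and the penalty value are all made up for illustration. The only thing taken from the story is that an unset wait time scores as 0.

```python
from statistics import mean


def average_wait(wait_times):
    """Naive metric: mean wait of riders who reached their floor.

    An empty list scores 0, mirroring the 'unset = 0' behaviour in the story.
    """
    return mean(wait_times) if wait_times else 0


def penalised_wait(wait_times, total_riders, penalty=600):
    """Tweaked metric (hypothetical): each undelivered rider costs a fixed penalty."""
    undelivered = total_riders - len(wait_times)
    return (sum(wait_times) + penalty * undelivered) / total_riders


def simulate(policy, arrivals):
    """Return the wait times of the riders the policy actually delivers."""
    return [wait for wait in arrivals if policy(wait)]


def serve_everyone(wait):
    return True


def serve_no_one(wait):
    return False


arrivals = [12, 30, 45, 8]  # made-up waits in seconds
policies = (serve_everyone, serve_no_one)

# Score both policies under each metric and pick the lowest score.
naive_scores = {p.__name__: average_wait(simulate(p, arrivals)) for p in policies}
fixed_scores = {
    p.__name__: penalised_wait(simulate(p, arrivals), len(arrivals)) for p in policies
}

best_naive = min(naive_scores, key=naive_scores.get)
best_fixed = min(fixed_scores, key=fixed_scores.get)

print(best_naive)
print(best_fixed)
```

Under the naive metric the optimizer prefers the policy that never lets anyone on, because nothing in the score says people have to arrive. Once undelivered riders cost something, the sensible policy wins.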

If the AI doesn’t have good goals programmed in, we’re fucked. It’s also being controlled by huge corporations that decide what those goals are.

That’s valid, but it has nothing to do with general intelligent machines.

An AI can’t be controlled by corporations, an AI will control corporations.

True but that doesn’t keep it from screwing a lot of things up.

I’m not saying there’s nothing to be afraid of concerning today’s “AI”, but it’s not comparable to movie/book AI.

Yes, sure. I meant things like employment, quality of output

Yes, sure. I meant things like employment, quality of output

That applies to… literally every invention in the world. Cars, automatic doors, rulers, calculators, you name it…

With a crucial difference - inventors of all those knew how the invention worked. Inventors of current AIs do NOT know the actual mechanism how it works. Hence, output is unpredictable.

Lol could you provide a source where the people behind these LLMs say they don’t know how it works?

Did they program it with their eyes closed?

they program it to learn. They can tell you exactly how it learns, but not what it learned (there are some techniques to give some small insights, but not even close to the full picture)

Problem is, how it behaves depends on how it was programmed and what it learned after being trained. Since what it learned is a black box, we cannot explain its behaviour
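A toy way to picture the "how it learns" versus "what it learned" split, as a rough sketch only: the data, learning rate, and step count below are invented, and a real LLM has billions of parameters instead of two. The learning rule is a few explicit lines anyone can read. What comes out is just numbers.

```python
# Made-up training data that happens to follow y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]

w, b = 0.0, 0.0          # the model's entire "knowledge" is these two numbers
learning_rate = 0.05     # assumed value, chosen so this toy converges
n = len(xs)

for _ in range(2000):
    # How it learns: plain gradient descent on mean squared error.
    errors = [(w * x + b) - y for x, y in zip(xs, ys)]
    grad_w = 2 / n * sum(e * x for e, x in zip(errors, xs))
    grad_b = 2 / n * sum(errors)
    w -= learning_rate * grad_w
    b -= learning_rate * grad_b

# What it learned: a list of floats and nothing else.
print([round(w, 3), round(b, 3)])
```

With two parameters you can still read the result and say "that's a slope and an intercept". The argument above is that with billions of such numbers, nobody can read off what they mean, even though the update rule is exactly as explicit as this one.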

Yes I can. example

Opposed to other technology, nobody knows the internal structure. Input A does not necessarily produce output B.

Whether you like it or not is irrelevant.

Regardless of whether it’s true AI or not (I understand it’s just machine learning), Cameron’s sentiment is still mostly true. The Terminator in the original film wasn’t some digital being with true intelligence, it was just a machine designed with a single goal. There was no reasoning or planning really, just an algorithm that said “get weapons, kill Sarah Connor”. It wasn’t far off from a Boston Dynamics robot using machine learning to complete a task.

You don’t understand. Our current AI? Doesn’t know the difference between an object and a painting. Furthermore, everything it perceives is “normal and true”. You give it bad data and suddenly it’s broken. And “giving it bad data” is way easier than it sounds. A “functioning” AI (like a Terminator) requires the ability to “understand” and scrutinize— not just copy what others tell it without any context or understanding, and combine results.

Mate, a bad actor could put today’s LLMs, face recognition software and functionality into an armed drone, show it a picture of Sarah Connor and tell it to go hunting and it would be able to handle the rest. We are just about there. Call it what you want.

LLM stands for Large Language Model. I don’t see how a model to process text is going to match faces out in the field. And either that drone is flying at chest height, or it had better recognize people’s hair patterns (balding Sarah Connors beware or wear hats!).

That sure sounds nice in your head.

I dunno, James. Pretty sure Isaac Asimov and Ray Bradbury had more clear warnings years prior to Terminator.

Maybe Harlan Ellison too

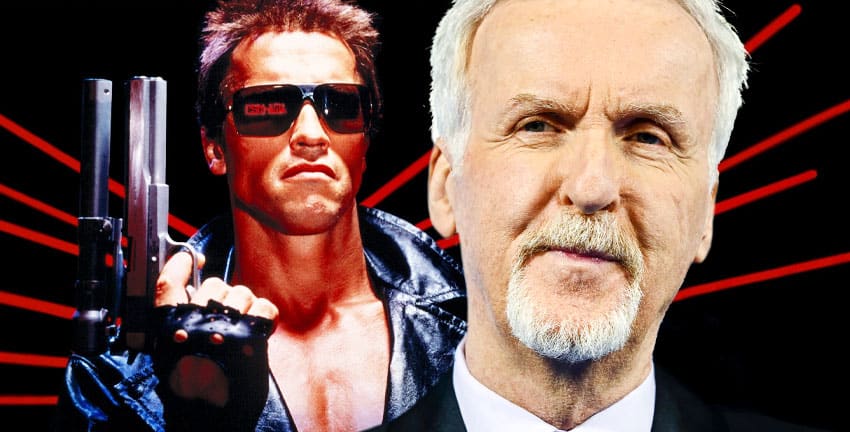

IIRC the original idea for the Terminator was for it to have the appearance of a regular guy on the street, the horror arising from the fact that anyone around you could actually be an emotionless killer.

They ended up getting a 6 foot Austrian behemoth that could barely speak English. One of the greatest films ever made.

An evil 1984 Arnold Schwarzenegger with guns would be terrifying AF even if it wasn’t an AI robot from the future.

Lance Henriksen who ended up playing a cop in The Terminator was originally cast as the Terminator. But then Arnold was brought in and the rest is history.

Maybe as consolation, Cameron went on to cast Lance as the rather helpful android in Aliens.

He seems like the average reddit user raises nose

So is the new trend going to be people who mentioned AI in the past starting to act like they were Nostradamus when warnings of evil AIs gone rogue have been a trope for a long long time?

I’m sick of hearing from James Cameron. This dude needs to go away. He doesn’t know a damn thing about LLMs. It’s ridiculous how many articles have been written about random celebs’ opinions on AI when none of them know shit about it.

He should stick to making shitty Avatar movies and oversharing submarine implosion details with the news media

Here’s the thing. The Terminator movies were a warning against government/army AI. Actually slightly before that I guess WarGames was too. But, honestly I’m not worried about military AI taking over.

I think if the military set up an AI, they would have multiple ways to kill it off in seconds. I mean, they would be in a more dangerous position to have an AI “gone wild”. But not because of the movies, but because of how they work, they would have a lot of systems in place to mitigate disaster. Is it possible to go wrong? Yes. Likely? No.

I’m far more worried about the geeky kid that now has access to open source AI that can be retasked. Someone that doesn’t understand the consequences of their actions fully, or at least can’t properly quantify the risks they’re taking. But, is smart enough to make use of these tools to their own end.

Some of you might still be teenagers, but those that aren’t, remember back. Wouldn’t you potentially think it’d be cool to create an auto gpt or some form of adversarial AI with open-ended success criteria that are either implicitly dangerous and/or illegal, or are broad enough to mean the AI will see the easiest path to success is to do dangerous and/or illegal things to reach its goal? You know, for fun. Just to see if it would work.

I’m not convinced the AI is quite there yet to be dangerous, or maybe it is. I’ve honestly not kept close tabs on this. But, when it does reach that level of maturity, a lot of the tools are still open source, they can be modified, any protections removed “for the lols” or “just to see what it can do” and someone without the level of control a government/military entity has could easily lose control of their own AI. That’s what scares me, not a Joshua or Skynet.

The army loves a chain of command. I don’t see this changing with AI. The army just putting AI in the commander’s seat and letting it roll just doesn’t sound credible to me.

The biggest risk of AI at the moment is the same posed by the Industrial Revolution: Many professions will become obsolete, and it might be used as leverage to impose worse living conditions over those who still have jobs.

That’s a real concern. In the long run it will likely backfire. AI needs human input to work. If it starts getting other AI fed as its input, things will start to go bad in a fairly short order. Also, that is another point. Big business is likely another probable source of runaway AI. I trust business use of AI less than anyone else.

There’s also a critical mass of unemployment at which revolution is inevitable. There would likely be UBI and an assured standard of living when we get close to that, and you’d be able to try to make extra money from your passion. I don’t doubt that corporations will happily dump their employees for AI at a moment’s notice once it’s proved out. Big business is extremely predictable in that sense. Zero forward planning beyond the current quarter. But I have some optimism that some common sense would prevail from some source, and they’d not just leave 50%+ of the population to die slowly.

This is really turning out like the ‘satanic panic’ of the 80’s all over again.

The difference being that there was never much proof for the Satanic panic and that now we have actual robot cop dogs patrolling streets.

scene: a scrap yard, full of torn-up cars

in a flash, a square-looking and muscular man appears

he walks into a bar, and when confronted by an angry biker he punches him in the face and steals his clothes & aviator sunglasses

scene: the square-looking man walks into an office and confronts a scared-looking secretary

Square man: I am heyah to write fuhst drafts of movie scripts and make concept aht!

And we were warned about Perceptron in the 1950s. Fact of the matter is, this shit is still just a parlor trick and doesn’t count as “intelligence” in any classical sense whatsoever. Guessing the next word in a sentence because hundreds of millions of examples tell it to isn’t really that amazing. Call me when any of these systems actually comprehend the prompts they’re given.
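To make "guessing the next word because the examples tell it to" concrete, here is a deliberately tiny sketch: a word-pair counter that picks the most frequent follower. This is an illustration only. Real LLMs use neural networks over tokens, not a lookup table of counts, and the corpus and function names here are invented.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for every word, which words followed it in the training text."""
    counts = defaultdict(Counter)
    words = text.lower().split()
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def guess_next(counts, word):
    """Return the most frequently seen follower of `word`, or None if unseen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


corpus = "the cat sat on the mat the cat ate the fish"  # made-up training text
model = train_bigrams(corpus)

print(guess_next(model, "the"))  # "cat" followed "the" most often
print(guess_next(model, "dog"))  # never seen, so there is nothing to guess
```

The counter has no idea what a cat is. It only knows that "cat" tended to come after "the" in what it was shown, and it has nothing to say about a word it never saw.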

EXACTLY THIS. it’s a really good parrot and anybody who thinks they can fire all their human staff and replace with ChatGPT is in for a world of hurt.

Not if most of their staff were pretty shitty parrots and the job is essentially just parroting…

At first blush, this is one of those things that most people assume is true. But one of the problems here is that a human can comprehend what is being asked in, say, a support ticket. So while an LLM might find a useful prompt and then spit out a reply that may or may not be correct, a human can actually deeply understand what’s being asked, then select an auto-reply from a drop down menu.

Making things worse for the LLM side of things, that person doesn’t consume absolutely insane amounts of power to be trained to reply. Neither do most of the traditional “chatbot” systems that have been around for 20 years or so. Which begs the question, why use an LLM that is as likely to get something wrong as it is to get it right when existing systems have been honed over decades to get it right almost all of the time?

If the work being undertaken is translating text from one language to another, LLMs do an incredible job. Because guessing the next word based on hundreds of millions of samples is a uniquely good way to guess at translations. And that’s good enough almost all of the time. But asking it to write marketing copy for your newest Widget from WidgetCo? That’s going to take extremely skilled prompt writers, and equally skilled reviewers. So in that case the only thing you’re really saving is the amount of wall clock time for a human to type something. Not really a dramatic savings, TBH.

Guessing the next word in a sentence because hundreds of millions of examples tell it to isn’t really that amazing.

The best and most concise explanation (and critique) of LLMs in the known universe.

James Cameron WOULD make this about James Cameron.

I warned everyone about James Cameron in 1983 and no one listened

The real question is how much time do we have before a Roomba goes back in time to kill the mother of someone who was littering too much?

How do you know it hasn’t already happened?

ITT: People describing the core component of human consciousness, pattern recognition, as not a big deal because it’s code and not a brain.

The technology is definitely impressive, but some people are jumping the gun by assuming more human-like characteristics in AI than it actually has. It’s not actually able to understand the concepts behind the patterns that it matches.

AI personhood is only selectively used as an argument to justify how their creators feed copyrighted work into it, but even they treat it as a tool, not like something that could potentially achieve consciousness.

So all you do is create phrases based on things you’ve read in the past and recognizing similar interactions between other people and recreating them? 🤔

No, we also transfer genetic material to similar looking (but not too similar looking) people and then teach those new people the pattern matching.

My point: Reductionism just isn’t useful when discussing intelligence.

Man… I must be smart as heck to be able to come up with my own thoughts then…

Idk man, I’m pretty sure I can find all of those words in a dictionary.

As opposed to what, exactly?