Dear Lemmy.world Community,

Recently, posts were made to the AskLemmy community that go against not just our own policies but the basic ethics and morals of humanity as a whole. We acknowledge the gravity of the situation and the impact it may have had on our users. We want to assure you that we take this matter seriously and are committed to making significant improvements to prevent such incidents in the future. Although I’m reluctant to say exactly what these horrific and repugnant images were, you can probably guess what we’ve had to deal with and what some of our users unfortunately had to see. I’ll add the thing we’re talking about in spoilers at the end of the post to spare the hearts and minds of those who don’t know.

Our foremost priority is the safety and well-being of our community members. We understand the need for a swift and effective response to inappropriate content, and we recognize that our current systems, protocols and policies were not adequate. We are taking immediate steps to strengthen our moderation and administrative teams, implement additional tools, and build enhanced pathways to ensure a more robust and proactive approach to content moderation. We are also working on ways to ensure these reports reach the mod and admin teams more quickly and clearly.

The first step will be limiting the image hosting sites that Lemmy.world will allow. We understand that this can cause frustration for some of our users, but we also hope that you can understand the gravity of the situation and why we find it necessary, not just to protect all of our users from seeing this but also to protect ourselves as a site. That being said, we would like input on which image sites we will be whitelisting. While we run a filter over all images uploaded to Lemmy.world itself, this same filter doesn’t apply to other sites, which is why we need to whitelist them.
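For anyone curious how a whitelist like this might work mechanically, here is a minimal Python sketch, assuming the check is done on the hostname of each linked image URL. The `ALLOWED_IMAGE_HOSTS` set and the `is_allowed_image_url` function are hypothetical illustrations, not Lemmy.world’s actual implementation or its final list of hosts.

```python
from urllib.parse import urlsplit

# Hypothetical whitelist for illustration only; the real list is what
# the admins are asking the community to help decide.
ALLOWED_IMAGE_HOSTS = {"lemmy.world", "imgur.com", "imgbb.com"}


def is_allowed_image_url(url: str, allowed: set[str] = ALLOWED_IMAGE_HOSTS) -> bool:
    """Return True if the URL uses http(s) and its host is a whitelisted
    domain or a subdomain of one (e.g. i.imgur.com matches imgur.com)."""
    parts = urlsplit(url)
    if parts.scheme not in ("http", "https"):
        return False
    host = parts.hostname  # lowercased, with port and credentials stripped
    if not host:
        return False
    return any(host == domain or host.endswith("." + domain) for domain in allowed)
```

Matching on the parsed hostname (plus subdomains) rather than doing a plain substring check avoids letting a look-alike such as `imgur.com.evil.example` through.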

This is a community made by all of us, not just by the admins, which leads to the second step: we will be looking for more moderators and community members who live in more diverse time zones. We recognize that at the moment our team is concentrated heavily in Europe and North America, and we want to strengthen coverage in other time zones to limit any delays as much as humanly possible in the future.

We understand that trust is essential, especially when dealing with something as awful as this, and we appreciate your patience as we work diligently to rectify this situation. Our goal is to create an environment where all users feel secure, respected and, more importantly, safe. Your feedback is crucial to us, and we encourage you to continue sharing your thoughts and concerns.

Every moment is an opportunity to learn and build, even the darkest ones.

Thank you for your understanding.

Sincerely,

The Lemmy.world Administration

spoiler

CSAM

What is the complete correct response for users to carry out if they spot CP?

Is just the report button on the post good enough? Is there some kind of higher level report for bigger-than-just-one-instance shit that threatens Lemmy as a whole? Should we call the FBI or some shit?

I haven’t come across any here, but if I do, I’d like to be able to aid in swift action against not only the post/account in question, but against the actual person running it.

In theory, reporting to the community moderators should be enough for users. It would then be the responsibility of those moderators to report it to the instance admin, and then the admin’s responsibility to report it to the instance’s local law enforcement. They will then handle it appropriately.

However, sometimes community moderators are corrupt and will ignore reports and even ban users for reporting content that breaks instance rules. In those cases, the user must report directly to the instance admin. As you can imagine, instance admins can also be corrupt, in which case the user must report to law enforcement.

But typically the first scenario is sufficient.

FYI: admins can see all reports. We currently have a tool running that scans for posts that are reported a lot, which will then notify people who can do something about it.
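How that tool is actually built isn’t described here, but the basic idea of counting reports per post and alerting someone once a threshold is crossed can be sketched in Python. The `flag_heavily_reported` function, the threshold value and the `notify` callback are all hypothetical; a real tool would also need to fetch reports from the instance and avoid alerting twice about the same post.

```python
from collections import Counter
from typing import Callable, Iterable


def flag_heavily_reported(
    reports: Iterable[int],
    threshold: int,
    notify: Callable[[int, int], None],
) -> list[int]:
    """Count reports per post ID and call notify(post_id, count) once for
    each post whose report count is at or above the threshold.
    Returns the flagged post IDs, most-reported first."""
    counts = Counter(reports)
    flagged = [post_id for post_id, n in counts.most_common() if n >= threshold]
    for post_id in flagged:
        notify(post_id, counts[post_id])
    return flagged
```

Here each entry in `reports` is just the ID of a reported post; in practice `notify` might page an on-call admin rather than wait for someone to open the report queue.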

That seems too slow. Sibling comment by admin is reassuring here.


You can always report CSAM to the FBI. You don’t need a mod’s guidance to do so.

Otherwise report and block.

Report and block should be the correct response.

Edit: actually, also report the user to the admin of the instance the user is from.

Thanks admins, for taking this seriously.

Take care of yourselves as well. The images sound horrific.

I think Lemmy should allow moderators to restrict posts in their communities to text only. That way, people would not be able to troll with images or videos in text-only communities.

It’s not the best solution, but it’s perfect for AskLemmy.

Maybe this website could use karma as a way to allow people to post images and/or videos. 100 general karma for pictures, 200 for videos, I don’t know.
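As a rough sketch of that suggestion, assuming a single karma number per user and the 100/200 thresholds above, the gate could be as simple as a lookup table. `PostKind`, `MIN_KARMA` and `may_post` are hypothetical names; this is not an existing Lemmy setting.

```python
from enum import Enum


class PostKind(Enum):
    TEXT = "text"
    IMAGE = "image"
    VIDEO = "video"


# Thresholds taken from the suggestion above (100 for pictures, 200 for
# videos); purely illustrative, not a real Lemmy configuration option.
MIN_KARMA = {PostKind.TEXT: 0, PostKind.IMAGE: 100, PostKind.VIDEO: 200}


def may_post(karma: int, kind: PostKind) -> bool:
    """Return True if a user with this much karma may create this kind of post."""
    return karma >= MIN_KARMA[kind]
```

Keeping the thresholds in one table would make it easy for each community or instance to tune them, or to set them very high for text-only communities.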

It’s just a matter of being creative to avoid this kind of content.

There are lots of ideas from people, but unfortunately Lemmy software doesn’t actually support most of them. And in terms of adding support to Lemmy, well, there are just two devs plus community support, so if people have the skills, they may want to consider contributing code to implement some of these things.

Edit: just adding that some instances are building bots (kinda like an automod) but with 1,000+ instances we kinda need something built in

I think that’s at least partially why that Sublinks fork is starting. It’s in Java so the number of people capable of helping is much, much larger.

And while I’m excited to see how that turns out, I’ve got some reservations, particularly about some of the “moderation tools” being suggested, but if it breathes new life and excitement into a fediverse Reddit replacement, that’s a good thing.

The cool thing about ActivityPub is that we don’t have to pick one. Some instances run Kbin, some Mbin, some Lemmy. Some can run Sublinks and everyone gets to interact with each other.

The way federation works, instances can’t force their stuff on other instances. If a post in a community on your instance is removed by an admin of a different instance, you’ll still see it on yours and they won’t see it on theirs. The exception is when a moderator of that community, or an admin of the instance hosting it, removes the post: then it is removed for everyone (though Lemmy still has some quirks in that regard). So different instances can have different moderation policies, and you can join one that matches the moderation policy you want.
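The removal rule described above can be expressed as a small Python function. This is a simplified model of the comment’s description, not Lemmy’s actual federation code, and it ignores the quirks mentioned; the `Remover` type and its fields are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Remover:
    instance: str              # instance the moderator/admin account lives on
    is_admin: bool             # admin of their own instance
    moderates_community: bool  # listed as a moderator of the community


def removal_is_global(remover: Remover, community_instance: str) -> bool:
    """Removals by the community's moderators, or by admins of the instance
    hosting the community, apply everywhere; any other admin's removal only
    hides the post on that admin's own instance."""
    if remover.moderates_community:
        return True
    return remover.is_admin and remover.instance == community_instance
```

This is why a remote admin can protect their own users from a post without being able to delete it from the community’s home instance.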

If people want to help, they can contribute to Lemmy directly or write moderation tools in other languages. It won’t help anyone to spend 8+ man-years of development only to reach feature parity.

Ah yes, the open source way

Especially make newer accounts text-only. And here’s another idea:

Image posts should not be federated for hours, maybe even a day. That would limit the spread, and provide local mods time to detect and remove content.
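A minimal Python sketch of that idea, assuming the instance holds outgoing image posts in a time-ordered queue and drops any that local mods remove before the delay expires. `DelayedFederationQueue` and its methods are hypothetical, not an existing Lemmy feature; timestamps are passed in explicitly (in seconds) to keep the example simple.

```python
import heapq
from dataclasses import dataclass, field


@dataclass(order=True)
class _Pending:
    release_at: float                     # heap is ordered by release time only
    post_id: int = field(compare=False)


class DelayedFederationQueue:
    """Hold image posts for `delay` seconds before they are federated,
    giving local mods time to remove them first."""

    def __init__(self, delay: float):
        self.delay = delay
        self._heap: list[_Pending] = []
        self._removed: set[int] = set()

    def submit(self, post_id: int, has_image: bool, now: float) -> bool:
        """Queue image posts; return True if the post may federate immediately."""
        if not has_image:
            return True
        heapq.heappush(self._heap, _Pending(now + self.delay, post_id))
        return False

    def remove(self, post_id: int) -> None:
        """Record a moderator removal so the post is never federated."""
        self._removed.add(post_id)

    def due(self, now: float) -> list[int]:
        """Pop queued posts whose delay has elapsed, skipping removed ones."""
        ready = []
        while self._heap and self._heap[0].release_at <= now:
            item = heapq.heappop(self._heap)
            if item.post_id not in self._removed:
                ready.append(item.post_id)
        return ready
```

For example, with `delay=3600` an image post submitted at time 0 only comes out of `due()` once an hour has passed, and never comes out if a mod called `remove()` on it first.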

Well thanks for the spoiler thing, but I don’t even know what the acronym (is it even an acronym?) means anyway and now I’m too afraid to do a web search for it 😅

Well, maybe it’s better that way.

It’s safe to look things up!

Looking up the name of a crime does not mean that you’re doing that crime.

If you look up “bank robbery” that doesn’t make you guilty of bank robbery. It doesn’t even mean you’re trying to rob a bank, or even want to rob a bank. You could want to know how bank robbers work. You could be interested in being a bank guard or security engineer. You could be thinking of writing a heist story. You could want to know how safe your money is in a bank: do they get robbed all the time, or not?

Please, folks, don’t be afraid to look up words. That’s how you learn stuff.

To be fair… Also how you end up on a list.

::: spoiler CSAM is child sexual abuse material I believe. So yeah, better not to look it up :::

I’m pretty convinced the initialism was created so that people could Google it in an academic context without The Watchers thinking they were looking for the actual content.

You may be correct although it seems like pretty dumb reasoning. I doubt any of those cretins would search the words “child sexual abuse material.” That would require acknowledging the abuse part of it.

I think you may have misunderstood. The entire point is to have an academic term that would never be used as a search by one of those inhuman lowlifes.

I don’t mean to be pedantic, so I hope my meaning came across well enough…

I think my point is that the acronym exists because of the search term, not the other way around. And it’s pretty laughable that the academic term has to be distilled down to an acronym because it is otherwise considered a trigger word.

They already use codewords when chatting about it. I forget what the more advanced ones were beyond “CP”, but there’s a Darknet Diaries episode or two that go over it. In particular, the one about Kik. He interviews a guy who used to trade it on that platform.

CSAM is meant to differentiate between child porn in general, which also includes things like cartoons of children, and material showing actual children being abused. Law enforcement have limited resources, so they want to focus on where they can do the most good.

The initialism was created to focus the efforts of law enforcement. They have limited resources, so they want to address actual children being abused, rather than Japanese cartoons. Both are child porn, but CSAM involves real children.

Your spoiler didn’t work, apparently you need to write spoiler twice.

The second “spoiler” is actually text for the spoiler.

This is customisable text

Ty, but it still seems like you need something there, at least for some apps.

Yes, I don’t think it works with nothing there. It needs something, but it can be pretty much any text (so long as the first word is spoiler)

Works in my app :P I really wish stuff like this were more standardized across the platform. Not really much point in spoilering it now since everyone is chatting about it.

thanks for the info!

I’ll just say illegal content involving minors.

Thank you for the transparency! Much appreciation to the volunteers who have had to unfortunately handle this.

That being said, we would like input on which image sites we will be whitelisting

Although I am from another instance, I did want to suggest some common hosts. While I use Imgbox personally, I often see Imgur and Catbox used quite a bit.

Catbox actually appears to be a generic temporary file host, though, rather than a dedicated image host.

Content moderation is a never-ending battle. It gets more difficult to enforce the larger your user base becomes. I really hope we can keep this community safe.

This is exactly what happened in various other “alt-reddit” sites that I was a part of. CSAM is uploaded as a tactic so that they can then contact the registrar of said website and claim that this site is hosting said CSAM.

It’s basically a sign of the site becoming more popular and pissing off the larger players.

Yeah, we got some requests like that, even for an announcement of ours. But we are planning to reduce them.

But how would they even get the CSAM in the first place? It’s not like everyone just has pictures of children being sexually abused?

We aren’t talking about upstanding citizens here

I’m glad I missed that, but sorry anyone had to see that.

I hope you will consider whitelisting https://imgbb.com/

It works well with Lemmy posts because it allows direct image linking.

If you’re hesitant to whitelist that site, then I hope you will allow https://postimages.org/

The drawback to the latter site is it’s ad-ridden (not a problem with uBO).

Both are superior image sharing sites to imgur, IMHO.

I use postimages every day so I hope it will be whitelisted.

I recently switched to imgbb after someone without an adblocker showed me a screenshot of one of my posts, but in my opinion everyone should be using uBlock Origin anyway, so I’d be ok with either option. They’re both extremely good image sharing sites.

The trouble is not everyone has an easy way to use an ad blocker - in particular iPhone users.

I mean, they should really just get an Android phone and install Firefox (or a hardened fork) then uBO, but try telling them that.

Hyperweb - my Safari iOS savior, supporting Adblock Plus (thus uBlock Origin) filterlists

Yeah but you’re still not getting an Android with hardened Firefox :p

Also, that sounds like you have to manually add the lists. You can get the uBO proprietary lists from GitHub, but I think most people would miss those.

They come with a number pre-added, but I’ve been meaning to copy lists from the uBO GitHub!

Yeah it’s the proprietary lists that handle YouTube I think.

Might be some Pixelfed instances worth adding to the list. I’ve been using pixelfed.de which has its community guidelines here. Haven’t had to report anything yet but the process to do so is easy (went through the first couple clicks and hit cancel) and it looks like they’ve got a way to flag posts for site admin review, not just mods. Seems like a good system to keep that sort of content under control and, though I haven’t looked through their documentation and code to confirm, I would be surprised if they didn’t have something automated too.

Thank you for doing unpleasant work like this when it comes up.

There are a lot of PixelFed instances, this might get complicated.

I’d ask for https://miniature.photography but I’m one of only a dozen or so people who post there.

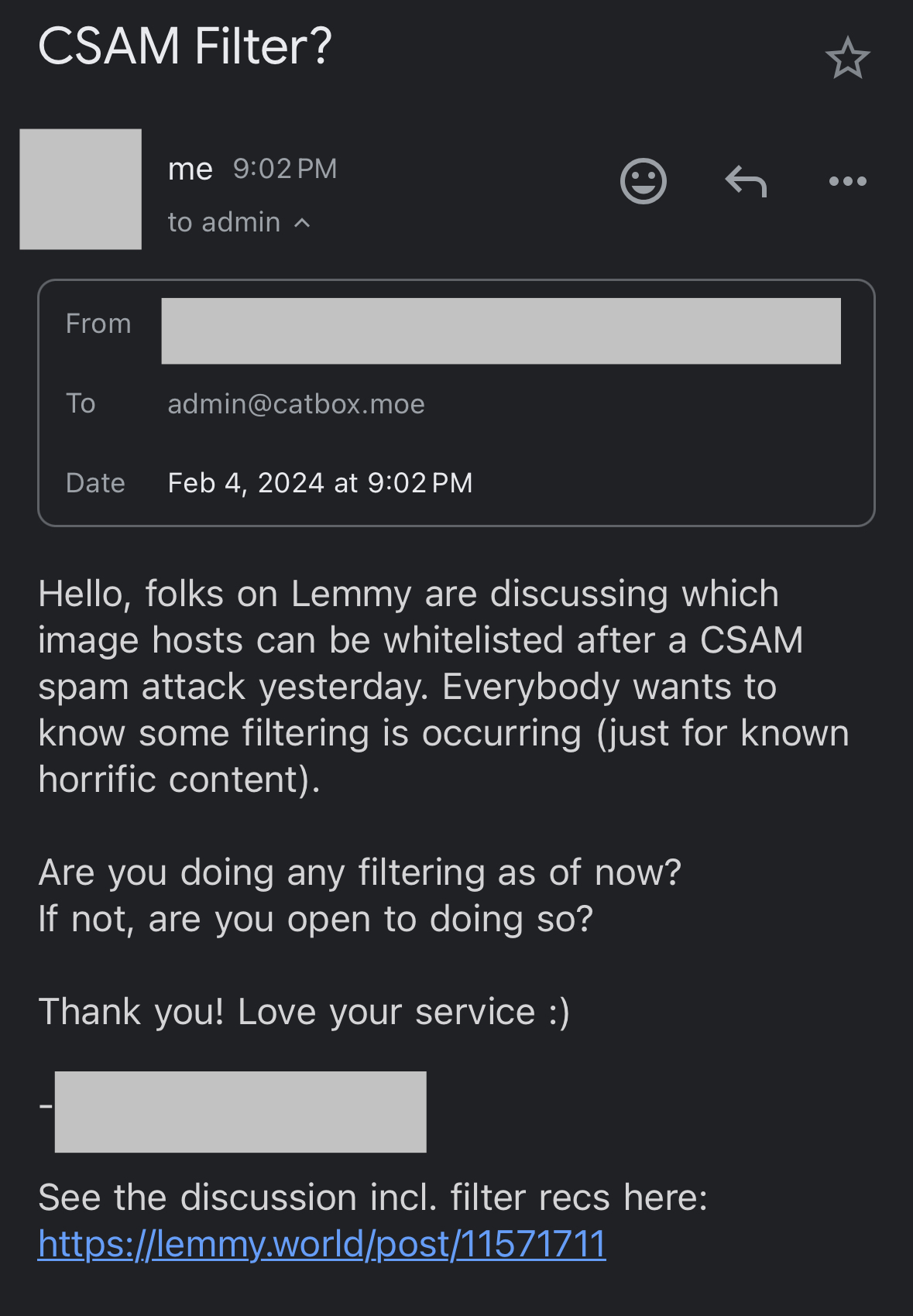

Lots of posts on the site come from files.catbox.moe and litterbox.catbox.moe, so I’d probably leave those whitelisted. He has terms against gore, CSAM, etc., but I don’t know if he has any kind of active filtration. It’s just one guy running a site, but it’s often really easy to upload there.

You guys should use this if possible: https://blog.cloudflare.com/the-csam-scanning-tool

Just FYI, this tool seems to only be available if you live in the US. I tried to apply for my own instance, located in Europe, and my request was denied after a lengthy and frankly tiresome “verification process”. This sucks.

This can only be activated when you are in the USA! So a no go for us.

I’ve also noticed many links are to catbox but… Am I the only one who can’t ever load those? I’ve never actually had a link to that host work.

It’s sporadic for me. Sometimes it works and sometimes it doesn’t. I don’t exactly change anything either and it doesn’t seem to be traffic based.

Is that why half the images on this site fail to load for me?

Reached out:

Any response?

No, just bumped the email chain thanks to you :)

Catbox admin should be given one month to implement one of the several free CSAM filters available. If they have not done so in a month, it’s clear they’re not a safe resource.

As an alternative to the report button, could Lemmy.world have a new community, LemmyWorldSupport_911, where anyone can post and which is monitored by moderators or admins so they can react promptly? (911 = American number for emergency services)

As part of the community rules, posting things that don’t warrant a 911 reaction could result in penalties for anyone looking to “prank” it or abuse its intended purpose.

Some small communities only have one mod and that mod has to sleep.

(911 =American number for emergency services)

Could also do 112, since Lemmy.world is hosted in Europe :)

In Aus, we have 000, triple zero. I think 112 works here too, might just be mobiles though. It used to be triple oh, but some people saw the keypads had letters on them and dialled 777 or whatever number o was on.

I’ve probably seen too much American media. I’m happy for any, even 0118 999 881 999 119 725 … 3 but it might be a bit hard to learn.

Or 912 bc WE DOOOO! WE DOOOO!

I’m planning on making something like that, but am first focussing on improving the features we already have.

Do we know the site they used to host the CSAM? Can’t we try to get whoever runs those servers to go after this troll? ’Cause if they had one CSAM image, I bet they have a bunch. Maybe the FBI can raid them and help save some kids from these monsters.

From context clues it seems like they were hosted on Lemmy which is why they are now limiting image hosting.

Afaik after the first time this happened lemmy.world added content scanning, but from this post it sounds like the images were on an external hosting site and couldn’t be scanned.


Damn, this broken record again? That sucks, and I’m sorry you had to see that.

Great contribution to the discussion

I dunno, I was just kind of disappointed that this kind of crap is still going on. Like, I was hoping for something more interesting, in a way, rather than the same old pathetic trolling.

Right next to the same old pathetic whining. Peas in a pod!

You’d fit in better back on reddit.

You’d think, but when I called out zero-content comments there, they reacted just like you are. Damned if I do, damned if I don’t 🤷‍♂️

I made a simple comment showing my support to the admin, which after this experience I’m sure is much needed. You’ve come in here behaving like an ass with zero effort yourself - you were wrong, my comments have had far more value to the discussion than anything you’ve brought. I don’t think the problem is the platform, I think the problem is you.

🤣🤣🤣

Damn, this broken record again? That sucks, and I’m sorry you had to see that.

Woah, check out that value loaded comment

my comments have had far more value to the discussion

🤣🤣🤣

That being said, we would like input on which image sites we will be whitelisting.

I’d like to suggest postimages(dot)org. I’ve been using that site since leaving reddit/imgur over the summer. They seem to be a good free service (although they do offer a premium tier) and according to their ‘about us’ section they’ve been operating for 20 years.

As one of the smaller instance admins who had to deal with the content: one of the image hosts was postimg.cc, so I’m not sure how fast they take down content or whether they run any kind of filtering.

Well that’s disturbing to hear. I figured if they’ve been around that long they’d have worked out effective tools to combat that stuff. But I suppose that might be a naive assumption, it must be a constant issue for image hosting services.

You have also implemented a ban on any contribution from a VPN without announcing it. It has been very annoying to figure out.

Please do announce these kinds of changes and limitations publicly unless you absolutely cannot.